Whether you’ve greenlit them or not, AI meeting assistants are already in your organization.

From Zoom and Google Meet to third-party tools like Fireflies, Fathom, Otter, and tl;dv, employees are recording, transcribing, and summarizing meetings more than ever. More often than not, data security, compliance, or long-term risk doesn’t even cross their minds.

On the surface, it looks like a productivity win. But beneath that convenience lies a complex web of shadow IT, legal gray zones, and high-value data moving outside your control.

This guide outlines what every Head of IT must consider before meeting recording becomes business as usual: from consent workflows and encryption standards to red flags, legal landmines, and the tools worth trusting.

Let’s dive in.

Security and Compliance First

When your team records a meeting, they’re generating a new dataset that may include customer information, IP, internal strategy, or even legally sensitive conversations. And like any data, it needs to be protected from the moment it’s created.

Here are several things that need to be kept in mind at all times.

Where is the Data Stored?

Most AI meeting tools are cloud-based, but that doesn’t mean they’re all equal.

Ask:

- Is the data stored in your region?

- Can you choose between local, EU, or US-based servers?

- Are they compliant with local data sovereignty laws?

- Do they provide private storage? (As in, does your data get stored with other organizations’ data, or can you have your own separate cloud storage?)

If a tool stores your meeting data in a country outside your regulatory zone, you could unknowingly breach GDPR, HIPAA, or APPI obligations. That holds even if the conversation itself never left your Zoom call, so double-check before you commit.

Knowing where your data is stored is critical to keeping it safe. After all, you can’t keep your data secure if you don’t know where it is.
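The storage questions above can be turned into a simple vendor check. The following is a minimal sketch, not a vendor API: the `VendorStorage` fields, the region codes, and the issue messages are all hypothetical and would be filled in by hand from a vendor’s documentation or sales answers.

```python
from dataclasses import dataclass


@dataclass
class VendorStorage:
    """Hypothetical summary of what a vendor told you about storage."""
    name: str
    regions_offered: set[str]   # regions customers can choose from
    default_region: str         # where data lands if nobody changes anything
    dedicated_storage: bool     # False if data sits alongside other tenants


def residency_issues(vendor: VendorStorage, allowed: set[str]) -> list[str]:
    """Return the storage concerns for a vendor, given your allowed regions."""
    issues = []
    if not (vendor.regions_offered & allowed):
        issues.append("no allowed region offered")
    elif vendor.default_region not in allowed:
        issues.append("default region not allowed")
    if not vendor.dedicated_storage:
        issues.append("shared storage")
    return issues


# Example: an EU-only policy applied to a made-up vendor.
vendor = VendorStorage("ExampleNotes", {"us", "eu"}, "us", False)
print(residency_issues(vendor, allowed={"eu"}))
```

An empty list means the vendor passed these particular checks, not that it is compliant overall.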

Encryption in Transit and at Rest

This one’s non-negotiable. Encryption keeps recordings, transcripts, and summaries from being intercepted or leaked, especially when they’re shared across integrations like Slack or CRM tools.

Ensure your vendor encrypts data both in transit and at rest, and ideally supports end-to-end encryption or a zero-knowledge architecture, where even the vendor cannot read your content. This will keep your conversations safe from prying eyes.

Regulatory Compliance

At minimum, tools should be able to demonstrate compliance with:

- GDPR (for EU-based companies and customers)

- HIPAA (US healthcare)

- SOC 2 (information security standards)

- APPI (for Japan-based companies and customers)

If they can’t provide documentation or a compliance statement? That’s your first red flag.

In 2023, 82% of cloud data breaches involved misconfigured or poorly secured cloud assets. Meeting recordings are no exception: they live in the same cloud storage and often contain some of your most sensitive conversations, so any Head of IT should make sure their team is using a safe and compliant tool.

Consent Isn’t Optional

The legality of recording varies by country and state. In the U.S. alone, 11 states require two-party consent, meaning everyone on the call must agree to be recorded. That consent needs to be:

- Visible (recording notifications)

- Auditable (timestamped proof)

- Automated (so employees don’t have to manage it manually)

Red flag: Any tool that starts recording without prompting participants or storing consent records puts your legal team at risk, especially if recordings are shared externally or used as evidence.
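To make “auditable” concrete, here is a minimal sketch of a per-meeting consent log. The class and field names are hypothetical, and a real tool would persist these records rather than hold them in memory. The idea is simply that every response is stored with a UTC timestamp, and recording is allowed only once every participant has said yes.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLog:
    """Hypothetical in-memory consent log for a single meeting."""
    meeting_id: str
    # participant -> (granted?, when the response was captured, in UTC)
    records: dict[str, tuple[bool, datetime]] = field(default_factory=dict)

    def record(self, participant: str, granted: bool) -> None:
        """Store (or overwrite) a participant's answer with a timestamp."""
        self.records[participant] = (granted, datetime.now(timezone.utc))

    def may_record(self, participants: list[str]) -> bool:
        """True only if every listed participant has explicitly consented."""
        return all(
            p in self.records and self.records[p][0] for p in participants
        )


log = ConsentLog("weekly-sync")
log.record("alice@example.com", True)
# Bob has not answered yet, so recording should not start.
print(log.may_record(["alice@example.com", "bob@example.com"]))
```

Treating a missing answer as “no” is the conservative choice here: silence never counts as consent.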

Beyond the letter of the law, it’s just plain good manners to ask for somebody’s permission before recording them. Just as you wouldn’t (I hope) start following someone around and recording their conversations in person, you shouldn’t secretly record them online either.

If you still need convincing, read our other article about why recording without consent is a bad idea.

Shadow IT and Unapproved Tools

Want to hear an uncomfortable truth? I’m going to tell you anyway: even if your IT team hasn’t approved a meeting assistant, your employees have probably already invited one into the room.

From sales reps logging notes in Fathom to project managers using Jaime for summaries, AI meeting assistants are easy to set up, often free to start, and widely recommended across LinkedIn, Reddit, and sales Slack groups.

And that makes them a classic shadow IT threat.

The Scale of the Problem

- 65% of SaaS apps in enterprises are unsanctioned. Users often sign up with personal emails, which puts those accounts outside IT’s visibility.

- These rogue tools account for 30–40% of IT spend in large organizations, draining budgets and increasing risk.

- Nearly 50% of all cyberattacks now stem from shadow IT.

Source: Zluri.

This type of shadow IT includes AI note-taking apps that:

- Store sensitive recordings on unmanaged servers.

- Lack enterprise-grade access controls.

- Auto-integrate with tools like Gmail, Slack, or Notion without oversight.

There are plenty of tools that don’t explicitly ask participants for permission, nor make it clear that they’re recording in the first place. Tools like Granola, Jaime, Tactiq, and Notta can all record without a single person knowing, increasing the risk potential and making it difficult to prevent.

Why It’s Happening

Most AI meeting tools prioritize ease of use. That’s great for productivity, but it means they often skip formal onboarding, legal reviews, or IT approvals.

Employees just want help taking notes. What they don’t realize is that they may be violating company data policies, sharing confidential information with third parties, or uploading recordings to servers outside your compliance zone.

This is all bad news, but it can be ameliorated with good ol’ fashioned education.

What IT Can Do

Visibility and governance are paramount. Nobody wants to enforce bans on specific tools, and a ban may actually push more people toward hidden notetakers. Instead, start by:

- Creating a short, approved list of vetted tools.

- Monitoring usage through SSO or CASB solutions.

- Educating teams on what’s at stake and how it affects them.

In a 2024 Dashlane report, 39% of employees admitted using unmanaged apps, and 37% of corporate apps lacked single sign-on protections. This presents a major security weak point.

Shadow IT isn’t going away. But with the right controls, you can turn it from a risk into a competitive edge.

Tool Evaluation Checklist

There’s no doubt that AI meeting tools are here to stay. They’ve already revolutionized how we take meeting notes, and they’re in the process of revolutionizing how we actually use those notes (think automatic CRM syncs, AI agents, follow-ups, and assigned tasks). The real question is: which AI notetakers belong in your stack — and which ones don’t?

In a 2023 Zerify survey, 97% of IT professionals expressed concern about video conferencing data privacy, while 84% feared a breach would result in loss of sensitive IP. That’s why every IT leader should perform a tool evaluation to make sure they’re compliant, useful, and secure.

Here’s what every Head of IT should look for when evaluating a meeting assistant, whether it’s tl;dv, Fireflies, Otter.ai, Fathom, or any future platform your team is tempted to try.

1. Integration Depth (Not Just Breadth)

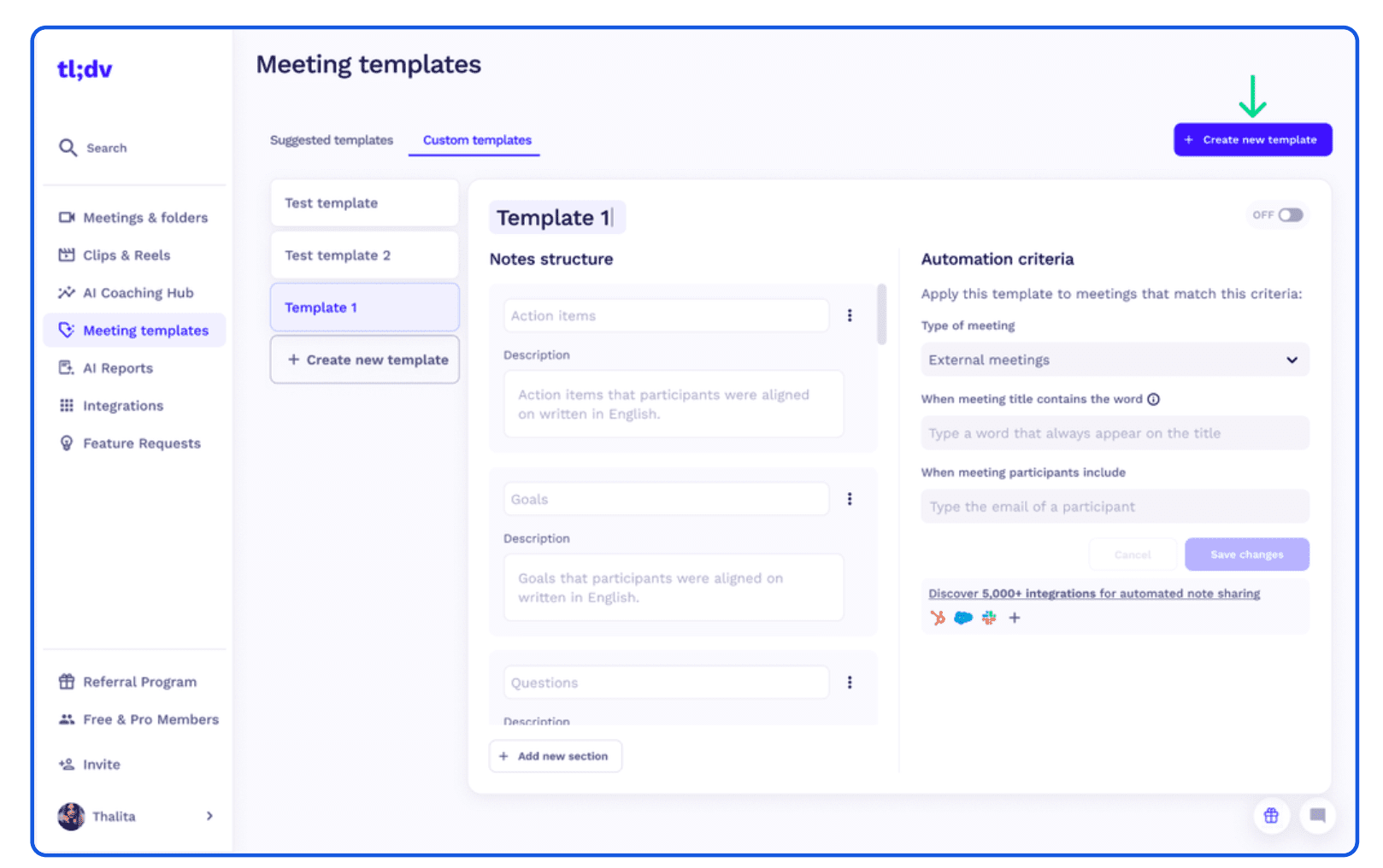

A lot of tools celebrate the number of integrations they have, and that is obviously a good thing. The more tools an assistant integrates with, the more likely yours are included, and the easier it is to set up workflows. tl;dv, for example, integrates with over 5,000 tools, including hundreds of CRMs, project management tools, and work collaboration platforms.

However, how deep do these integrations go? One super deep integration between tl;dv and HubSpot goes a lot further than twenty shallow integrations between tl;dv and 20 different CRMs. For tl;dv, it’s a good job we have both.

But in general, IT leaders should be asking:

- Does the tool integrate with your core systems like CRM, project management, cloud storage?

- Can you control how those integrations behave?

- Is it read-only, write-capable, or bi-directional?

A good tip is to avoid tools that sync data without giving you visibility or version control. tl;dv, for instance, lets you create meeting note templates so that your meeting notes are organized exactly how you want them to be. These can then be automatically synced with CRM systems to fill in the exact fields you need. It’s not just a copy/paste job, but an efficient way of filling out exactly what you need without any manual hassle.

2. Admin Controls and Permissions

Another thing Heads of IT should look out for is admin controls and permissions. Ask:

- Can you manage users via SSO, SCIM, or role-based access?

- Is there an admin dashboard with analytics, usage insights, and tool-wide visibility?

- Can you disable or restrict access to recordings and transcripts at a team or domain level?

If there’s no admin panel, you get no control. That’s an instant risk.

With tl;dv, admins are given full control. Not only can they auto-record and auto-share all their team’s conversations (even when the admin themselves isn’t present or even invited), they can also prevent deletion. What that means is a sales manager can prevent their reps from accidentally (or intentionally) deleting calls. This saves your team from accidental data loss while also stopping malicious actors.

3. Custom Data Retention Policies

More questions for IT leaders to ask regarding custom data retention policies:

- Can you set default retention timelines (e.g. auto-delete after 30/60/90 days)?

- Can you apply different policies to different teams (e.g. Sales vs Legal)?

Organizations with strict compliance needs (especially in finance, law, and healthcare) need full control over data lifecycle, not just a blanket “delete all” button.

Most enterprise-scale solutions provide more flexible data retention policies, but it’s something that’s definitely worth checking out. You don’t want to sign on without one.
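As a rough illustration of per-team retention, the sketch below decides whether a recording is past its retention window. The team names and day counts are placeholders chosen to echo the 30/60/90-day examples above; they are not recommendations, and real tools expose this through their own admin settings.

```python
from datetime import date, timedelta

# Hypothetical policy table: days to keep recordings, per team.
RETENTION_DAYS = {"sales": 90, "legal": 30}
DEFAULT_RETENTION_DAYS = 60  # applied to any team not listed above


def is_expired(team: str, recorded_on: date, today: date) -> bool:
    """True if the recording is older than its team's retention window."""
    days = RETENTION_DAYS.get(team, DEFAULT_RETENTION_DAYS)
    return today > recorded_on + timedelta(days=days)


# A recording from 1 January, checked on 15 February of the same year.
print(is_expired("legal", date(2024, 1, 1), date(2024, 2, 15)))
print(is_expired("sales", date(2024, 1, 1), date(2024, 2, 15)))
```

Keeping the policy in one table makes it easy to review with Legal and to change without touching the deletion logic.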

4. Audit Logs & User Activity Tracking

One subtle thing that drastically helps IT heads choose the right tool is whether or not they can track user activity. This helps IT see which features are being used, which aspects of the tool are providing the most value, and which ones aren’t getting used at all. It’s a great way to monitor what needs more education, onboarding, or incentive, as opposed to what can be scrapped altogether.

Think about these questions when choosing a tool for your team or organization:

- Can you see who accessed what, when, and what actions they took?

- Are you alerted to unauthorized access or data exports?

If the platform offers exportable logs for security audits, even better!
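If a platform does offer exportable logs, even a few lines of scripting can answer the “who exported what” question. This sketch assumes a hypothetical log shape (`user`, `action`, `recording_id`, `timestamp`); actual export formats differ by vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical shape of one row in an exported audit log."""
    user: str
    action: str        # e.g. "view", "export", "delete"
    recording_id: str
    timestamp: str     # ISO 8601 string as exported


def unauthorized_exports(
    events: list[AuditEvent], export_allowed: set[str]
) -> list[AuditEvent]:
    """Return export events performed by users not on the allow-list."""
    return [
        e for e in events
        if e.action == "export" and e.user not in export_allowed
    ]


events = [
    AuditEvent("ana", "view", "rec-1", "2024-05-01T09:00:00Z"),
    AuditEvent("ben", "export", "rec-1", "2024-05-01T09:05:00Z"),
    AuditEvent("ana", "export", "rec-2", "2024-05-01T09:10:00Z"),
]
for event in unauthorized_exports(events, export_allowed={"ana"}):
    print(event.user, event.recording_id, event.timestamp)
```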

5. Export, Delete, and Download Rights

Nobody wants a tool with no option to export or download. If the recordings are trapped inside the platform, they’re not actually that useful. Always be sure to check out the export, delete, and download rights before signing your whole organization over to a restrictive plan.

- Can you download transcripts and recordings in structured formats (e.g. JSON, CSV, MP4)?

- Can you fully delete meeting data, not just “hide” it from the UI?

This becomes critical in the case of right-to-be-forgotten (GDPR), employee exits, or legal takedowns.

The right platform should make compliance easier, not harder. And it should work with your security stack, not around it. This checklist is your frontline defence against any potentially harmful tool.

Employee Training and Usage Guidelines

Even the most secure tool in the world can’t protect you from a poorly trained user.

Your team might know how to record meetings, but do they know when they should, how to disclose it, and what happens to the data afterward?

As a Head of IT, your role isn’t just to approve tools, it’s to set the standards for how they’re used responsibly. Essentially, you’re responsible for making sure everyone in your organization knows how to use these tools without risking data and privacy. This is especially important when you’re dealing with sensitive customer data too.

Set the Ground Rules

Include guidance on:

- When it’s appropriate to record (e.g. internal meetings vs client calls)

- Which platforms are approved

- Who owns the recording — the user? The company? Legal?

- How recordings and summaries can (or can’t) be shared

One of the quickest and easiest ways to do this is to publish a short “Recording Policy Cheat Sheet” and bake it into onboarding for new hires and managers.

Teach Consent as a Best Practice

Even in one-party consent regions, build the muscle of active transparency:

- Always notify participants that recording is on

- Use tools that display clear visual indicators (e.g. banners, alerts, verbal prompts)

- Reinforce respect for off-the-record conversations or sensitive moments

Employees using free tools that don’t show visual or audio consent warnings is a massive red flag that needs to be dealt with early. This is even more true on client calls, where you risk leaking sensitive information about people or businesses outside of your own.

Training Matters

The end-user is often thought to be the weakest link in the security chain with over 90% of security incidents involving human error. Additionally, 30% of breaches involve internal actors, whether malicious or simply unaware.

In other words: your tool isn’t the problem. Your least-trained employee is.

To fix that, employees need training, onboarding, and best practices to follow. The good thing about AI meeting recorders is that they make this easy: you can use the tool itself to record and share a walkthrough of how to use it.

Make Responsible Use the Default

Whenever possible, automate best practices. Use tools that prompt for consent and offer auto-delete defaults to limit data hoarding. You can also provide templated disclaimers that employees can copy and paste. A simple example might be: “This meeting is being recorded to support note-taking and internal documentation. Please let us know if you’d prefer not to be recorded.”

That said, a good tool will already ask other meeting participants for permission on its own. You won’t need to lift a finger.

The more frictionless you make good behavior, the more likely people are to follow it.

That’s why tl;dv sends emails in advance of meetings to gain permission. Some tools that join midway through show a pop-up to announce they’re recording, but they don’t give participants much of a way to decline. If you don’t like it, you just have to leave the call or ask the admin to stop.

The Legal Landscape

Recording a meeting isn’t just a technical action, it’s a legal one. And depending on where your participants are located, it could land your company in serious hot water if handled incorrectly.

This is where many teams — especially globally distributed ones — get caught off guard.

Consent Laws: One-Party vs Two-Party

- In the U.S., 38 states follow one-party consent laws, meaning only one person on the call needs to know it’s being recorded.

- But 11 states (including California, Florida, and Pennsylvania) require two-party (or all-party) consent.

- In the EU, under GDPR, explicit consent is typically required for recordings, especially if personal or identifying information is captured.

- APPI (Japan) and PIPEDA (Canada) impose similarly strict standards for privacy and disclosure.

If your tool starts recording automatically, without prompting for or capturing consent, you may be violating the law without knowing it.
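A “region-aware” consent check can be sketched in a few lines. Note the assumptions: the state set below contains only the three all-party states named above and is deliberately incomplete, the location codes are a made-up convention, and treating every non-U.S. participant as requiring everyone’s consent is a conservative simplification rather than a statement of any country’s law.

```python
# Only the three all-party states named in this article. NOT a full list.
ALL_PARTY_US_STATES = {"CA", "FL", "PA"}


def required_consent(locations: list[str]) -> str:
    """Return "all-party" or "one-party" for a set of participants.

    Locations use a hypothetical convention: "US-<state>" for U.S.
    participants (e.g. "US-CA") and any other code (e.g. "EU", "JP")
    for everyone else.
    """
    for loc in locations:
        if loc.startswith("US-"):
            if loc[3:] in ALL_PARTY_US_STATES:
                return "all-party"
        else:
            # Conservative assumption: outside the U.S., ask everyone.
            return "all-party"
    return "one-party"


print(required_consent(["US-TX", "US-CA"]))
print(required_consent(["US-TX", "EU"]))
```

The strictest participant sets the rule for the whole call, which is why a single all-party location is enough to change the result.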

Are Transcriptions Legally Discoverable?

Yes. In many jurisdictions, recordings, transcripts, and AI-generated summaries can be considered discoverable in court proceedings or internal investigations.

That means:

- Any inaccuracies in AI summaries could be problematic

- Data must be stored securely and retrievable when needed

- You may be required to delete or produce specific transcripts under legal obligation

While not exactly the same, Sam Altman, CEO of OpenAI, recently acknowledged something in the same ballpark: conversations with ChatGPT carry no legal privilege and could be produced as evidence in court.

AI Summaries ≠ Legal Records

While many tools now auto-summarize meetings, summaries are interpretations, not facts. If your teams rely on these as sole “records” of the conversation, it could lead to disputes, especially in legal or HR-sensitive matters.

Make sure your employees know:

- AI summaries are helpful, but not definitive

- For compliance-critical meetings, the original recording and transcript are what matter

What IT Should Do

- Ensure all tools support region-aware consent mechanisms

- Store a consent audit log with timestamps for each meeting

- Educate teams on the legal discoverability of transcripts and recordings

- Default to more transparency, not less

Remember: compliance isn’t just about ticking boxes, it’s about protecting your company’s reputation, employee trust, and legal footing.

Performance, Storage, and Cost Considerations

AI meeting assistants might feel like “set-and-forget” tools, but under the hood, they can quietly accumulate massive data loads, storage costs, and performance liabilities.

As Head of IT, you’re not just responsible for approving the tool, you’re responsible for what happens when it scales.

Who Owns the Data: You or the Vendor?

This isn’t always clear. Some vendors retain rights to store or process your meeting data indefinitely, especially under free-tier agreements. Always ask:

- Is your meeting data portable?

- Can it be exported in usable formats (e.g. MP4, TXT, JSON)?

- Can you revoke access or request deletion across all systems?

Make sure you look for vendors that offer Data Processing Agreements (DPAs) and clearly define data ownership boundaries.

With a bit of digging, you can generally find what you’re looking for online. If not, most companies will tell you during a sales call. If they don’t know, or choose not to answer, that’s a sign that you should be looking elsewhere.

How Long are Recordings Stored?

- Are there default retention periods?

- Can you set custom expiration policies per team, region, or use case?

- Does the platform allow automated clean-up or flagging of stale data?

Long-term storage of video files and transcripts can quietly bloat your cloud usage. Even worse, it could leave your sensitive data lying around for years.
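To see how quickly storage can grow, a back-of-the-envelope estimate helps. Every number in the example below (team size, hours recorded, gigabytes per recorded hour) is a placeholder assumption; substitute figures from your own usage and your vendor’s file sizes.

```python
from typing import Optional


def monthly_storage_gb(
    users: int, hours_per_user: float, gb_per_hour: float
) -> float:
    """New storage generated per month, in GB."""
    return users * hours_per_user * gb_per_hour


def accumulated_gb(
    monthly_gb: float, months: int, retention_months: Optional[int] = None
) -> float:
    """Total stored after `months`, with or without an auto-delete window."""
    if retention_months is None:
        return monthly_gb * months              # nothing is ever deleted
    return monthly_gb * min(months, retention_months)


# Placeholder inputs: 50 users, 20 recorded hours each, 0.5 GB per hour.
per_month = monthly_storage_gb(50, 20, 0.5)
print(accumulated_gb(per_month, months=24))                      # keep forever
print(accumulated_gb(per_month, months=24, retention_months=3))  # 3-month policy
```

With a retention window, stored volume levels off once the window is full instead of growing every month.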

Hidden Cost Traps

Free plans often come with invisible costs, especially when you think in terms of security and privacy.

- Limited transcription minutes

- Capped integrations (e.g. CRM sync only for paid tiers)

- Per-user or per-recording overages

If you’re scaling usage across departments, ensure your chosen tool:

- Offers enterprise pricing tiers with volume flexibility

- Doesn’t introduce sudden per-seat fees for downstream features like admin access, SSO, or retention

Always keep in mind that the average cost of a data breach hit $4.45 million in 2023, up 15% in just three years. Skimping on secure storage or proper deletion isn’t a cost-saving, it’s a liability. That figure should make you think twice before choosing a tool just because it’s cheaper.

Performance Under Pressure

Headcount isn’t all that matters when it comes to scalability. Your AI assistant needs to perform consistently, even when 20+ reps are recording calls at once. Consider:

- Does the tool handle simultaneous recordings across large teams?

- Is transcription real-time or delayed under load?

- Do integrations slow down when linked with heavy platforms like Salesforce or HubSpot?

The takeaway? Don’t just look at how a meeting tool works, look at what it costs, what it stores, and how it scales. Because at enterprise level, small inefficiencies multiply fast.

Quick Reference: 🚩 Red Flags 🚩 to Look Out For

Before you approve (or inherit) any AI meeting assistant, here’s a quick checklist of red flags that should immediately raise concerns for any Head of IT:

🚩 No encryption policies

If the platform doesn’t clearly state how it encrypts data in transit and at rest, assume it doesn’t.

🚩 Lacking consent workflows

No automatic consent prompts? No visible recording indicators? No audit trail? That’s a legal liability waiting to happen.

🚩 Data stored outside your region

Defaulting to U.S. or international servers without letting you choose can violate data sovereignty rules and may breach GDPR or APPI.

🚩 No admin dashboard or user visibility

If you can’t see how the tool is being used across your organization, or control access by team, role, or region, you’re flying blind.

🚩 No data deletion or retention settings

If transcripts and recordings are stored “forever” by default, you’re building up long-term compliance debt.

🚩 AI summaries used as final records

Summaries are helpful, but they’re still interpretations. Relying on them without backing them up with raw transcripts can lead to misrepresentation, especially in disputes or investigations.

🚩 No exportability or portability

Can you extract data in usable formats if you decide to switch platforms? If not, you’re locked in — or worse, at the vendor’s mercy.

🚩 Free plans without a clear data policy

If it’s free and fast to sign up, it’s likely your employees already have. That means your data might already be sitting somewhere outside your security perimeter. This one can only really be solved with education and perhaps an anonymous tech audit.

Use this list as your internal sniff test. If any tool triggers one or more of these red flags, consider it unsafe.
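The sniff test can also be kept as a small script so that every tool is judged against the same list. The flag identifiers below are hypothetical shorthand for the eight red flags above. An unanswered question counts as a triggered flag, in the spirit of “if it isn’t stated, assume it doesn’t.”

```python
# Hypothetical identifiers for the eight red flags listed above.
RED_FLAGS = [
    "no_encryption_policy",
    "no_consent_workflow",
    "data_outside_region",
    "no_admin_dashboard",
    "no_retention_settings",
    "summaries_as_final_records",
    "no_export",
    "free_plan_without_data_policy",
]


def triggered_flags(answers: dict[str, bool]) -> list[str]:
    """Return the flags a tool triggers; unanswered flags count as triggered."""
    return [flag for flag in RED_FLAGS if answers.get(flag, True)]


def is_unsafe(answers: dict[str, bool]) -> bool:
    """A tool is treated as unsafe if it triggers any red flag."""
    return len(triggered_flags(answers)) > 0


# Example: a made-up tool that passes everything except exportability.
answers = {flag: False for flag in RED_FLAGS}
answers["no_export"] = True
print(triggered_flags(answers), is_unsafe(answers))
```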

What Every Head of IT Should Do Next

AI meeting assistants aren’t going anywhere. And that shouldn’t be a nightmare, even for IT leads. In fact, it’s a good thing.

They can streamline workflows, improve customer engagement, and eliminate the drudgery of manual note-taking. But they also introduce a new class of risk: invisible tools capturing sensitive conversations, storing them who-knows-where, and operating well outside your governance frameworks.

The solution isn’t to block them. It’s to own the rollout.

Here are five simple steps you can act upon from this article:

1. Create an approved list of recording tools

Vet platforms for security, consent handling, storage location, and compliance certifications (GDPR, SOC 2, HIPAA, etc.).

2. Establish company-wide usage guidelines

Define when recording is appropriate, what must be disclosed, and how recordings/transcripts should be shared or stored.

3. Standardize consent workflows

Make consent prompts and audit trails non-negotiable, even in one-party consent jurisdictions.

4. Set clear data policies

Automate deletion timelines. Segment access by role. Track everything with audit logs.

5. Train your teams and make it easy to do the right thing

Equip employees with templates, disclaimers, and visual cues. Choose tools that make compliance effortless.

The rise of AI meeting tools is an opportunity for IT to lead. Tech adoption is one thing, but data stewardship is more critical, and it becomes more so every year.

The right recording policy protects your people, your data, and your reputation.

So before your team hits record, make sure IT hits pause and sets the rules.

FAQ for IT Leaders: Key Questions Before Approving Meeting Recordings

Is it legal for my team to record meetings without consent?

It depends on where your participants are located.

- In the U.S., 11 states require two-party (all-party) consent.

- In the EU, GDPR generally requires explicit, informed consent.

- In Japan, APPI enforces strict data handling laws.

Best practice: Always use tools with built-in consent prompts and audit logs.

Even if it isn’t a strict legal requirement in your jurisdiction, it’s still a good idea to ask permission before recording somebody (under any circumstances).

What are the biggest security risks with AI meeting assistants?

The main risks are:

- Shadow IT (unauthorized tools bypassing IT governance)

- Recordings stored in non-compliant regions

- Poor or missing encryption

- No admin visibility or data controls

These can lead to data breaches, non-compliance fines, and legal exposure.

Can AI-generated summaries be used as legal records?

Not reliably. AI summaries are interpretations, not transcripts. They may misrepresent or omit critical details and should not be used as the sole source of truth in legal or HR matters.

What should I look for when evaluating a meeting assistant tool?

Start with these essentials:

- Data encryption (in transit + at rest)

- Region-aware consent workflows

- Admin dashboard with usage visibility

- Role-based access controls

- Data retention, deletion, and export capabilities

- SOC 2 / GDPR / HIPAA / APPI compliance

How can I reduce the risk of employees using unapproved tools?

Educate, don’t punish.

- Create an approved list of vetted tools

- Use SSO/CASB to monitor app usage

- Share clear policies and onboarding guides

- Explain the risks in simple, real-world terms

Are meeting recordings considered “sensitive data”?

Absolutely — they often contain:

- Personally Identifiable Information (PII)

- Intellectual Property (IP)

- Strategic internal discussions

These fall under most privacy regulations and should be treated like any other sensitive data source.

Who owns the meeting data — the employee, the tool, or the company?

Ideally, your company does, but only if:

- You’ve signed a proper DPA (Data Processing Agreement)

- The tool allows data export and deletion

- You’ve explicitly outlined ownership in your internal policy

How long should recordings be stored?

Only as long as necessary.

Best practice is to define default retention policies (e.g. auto-delete after 30/60/90 days) and customize based on team (e.g. Sales vs Legal). Long-term storage = long-term risk.