In June 2025, OpenAI launched a feature called Record Mode. This is an AI Meeting Assistant, built directly into ChatGPT’s desktop app for Team users. It records your voice, transcribes your meeting in real time, and gives you a summary. One of our founders, Rapha, delved into this tool very early in its launch and you can check out what he thought here.

I, however, as something of a power user of ChatGPT, wanted to go a little deeper into the whole “ChatGPT for meeting notes” conversation.

I come from an editorial and sales background, I work in the AI space, and let’s be honest, I have a borderline parasocial relationship with ChatGPT at this point.

It’s my brainstorm partner, my outline machine, my private notepad, and yes, sometimes my shopping list dumping ground. It has legitimately helped me pick out the last three birthday presents for my partner. We go deep.

So when a built-in meeting recorder suddenly appeared, I got curious.

Not just about what it does, but about what it assumes, and how it handles a conversation.

Because I also use tl;dv (the best meeting assistant, lol) and other meeting assistants, I know what’s involved when you’re recording real conversations, with real people, about real things.

And the more I tested ChatGPT’s Record Mode, the more I found it a bit… unsettling. Not because it’s bad… it’s actually quite slick, simple and intuitive.

But because of what it quietly skips:

- Privacy

- Data retention

And the fact that you’re handing over your voice, with very little control or traceability.

Eeek! I’ll delve into that a bit more, but first, let’s get the lowdown in a TL;DR. If you just want to know how to use ChatGPT for your meeting notes now that Record Mode exists, you can skip straight to that section by clicking here.

TL;DR: Is ChatGPT Record Worth Using for Meeting Notes?

OpenAI’s new ChatGPT Record Mode makes it possible to use ChatGPT as a basic meeting assistant by recording, transcribing, and summarizing audio right inside the desktop app, but it’s currently Mac-only, tied to a paid Team plan, and lacks key business features like speaker tags, structured storage and compliance controls.

It’s useful for simple transcripts but still falls short of purpose-built tools for teams like tl;dv. The biggest drawback of ChatGPT Record Mode is that OpenAI is currently required by a court order to keep your data… indefinitely.

Best for: Individuals or small teams who want quick, lightweight meeting transcripts and summaries inside ChatGPT without setting up a dedicated AI meeting assistant.

Avoid if: You need reliable speaker attribution, searchable meeting libraries, basic compliance with GDPR, or integrations with tools like CRMs, project management, or knowledge bases. Also avoid it if you don’t want your data to be stored indefinitely against your will.

Verdict: ChatGPT’s Record Mode is decent, but for regular team use and real workflows, purpose-built meeting assistants like tl;dv remain the more practical choice.

How to Use ChatGPT for Meeting Notes: The Basics

To get set up with ChatGPT for meeting notes, you will need:

- A ChatGPT Team subscription (That’s $25/user/month, billed annually, and yes, you need at least two seats. No solo sneaking in.)

- The ChatGPT desktop app for macOS (Record Mode is Mac-only at launch. Windows and web not supported… yet.)

- An unlocked workspace with Record Mode enabled (Admins can toggle the setting, but there’s no fine-grained control yet.)
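To put a number on the cheapest way in, here is a quick back-of-the-envelope sketch. It only uses the figures quoted above ($25 per user per month, billed annually, minimum two seats); the function and constant names are mine, and it ignores taxes, currency conversion, and any later pricing changes.

```python
# Figures taken from the requirements list above; everything else is illustrative.
PRICE_PER_SEAT_PER_MONTH_USD = 25
MINIMUM_SEATS = 2
MONTHS_PER_YEAR = 12


def annual_team_cost(seats: int = MINIMUM_SEATS) -> int:
    """Return the yearly cost in USD for the given number of seats."""
    if seats < MINIMUM_SEATS:
        raise ValueError(f"A Team workspace needs at least {MINIMUM_SEATS} seats")
    return PRICE_PER_SEAT_PER_MONTH_USD * seats * MONTHS_PER_YEAR


if __name__ == "__main__":
    # Smallest possible workspace: 25 * 2 * 12
    print(annual_team_cost())  # 600
```

So even if you only want Record Mode for yourself, the two-seat minimum means the entry price is roughly $600 a year on these numbers.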

I downloaded the app, created a new Team, and got set up in under 5 minutes. ChatGPT happily took my card, and that was that: I was in!

There was no onboarding for Record Mode, no banner, no announcement. Just a quiet little grey mic icon in the chat bar.

Clicking it opens a small recording interface (very similar to Notion’s AI meeting assistant). It records your voice, captures system audio, and logs anything around your mic. You speak. It transcribes. When you hit stop, it summarises. You also have the option to pause it on the interface.

Overall, it’s fairly simple.

The notes took a minute or so to generate.

You can then scroll through the Canvas and ask ChatGPT questions about what was said, like you would in any other chat. It’s clean, clear, and in typical OpenAI style, feels deceptively low-key.

So, Do You Get A Transcript?

Yes, the transcript exists… technically. But it’s not exactly smooth to manage. There’s no clear download option, no structured summary you can export. It just sort of… hangs around in your chat thread like a weird dream you only half-remember. And without any video or audio to play back, it’s hard to verify what was actually captured.

I asked ChatGPT for specifics a few times and got a mix of responses:

- One time, it gave me a rough snippet of what I said

- Another time, it spat out what looked like placeholder code, mixed in with the notes from the AI

- Once, it referenced something called “Hive,” which made me wonder if I’d accidentally summoned a backend integration or just confused it entirely.

That’s when I started questioning how well it actually understands what’s happening. So I tested it a few different ways:

- I spoke out loud in full stream-of-consciousness mode

- I opened a YouTube clip of Gollum

- I had a normal conversation with someone in my house, while ChatGPT was still recording

I wanted to see what it picked up, what it ignored, and whether it could tell the difference between my voice and someone else’s.

Here’s what happened…

It Got… Weird

The first thing I did was record myself talking to… well, myself. Just stream-of-consciousness rambling, thinking aloud.

ChatGPT’s summary picked it up as a two-person conversation. It recognized distinct voices and perspectives, even though it was literally just me, switching tone mid-thought. Slightly unsettling, but fascinating.

I thought then, “OK then, this might not be very good.”

The second test I undertook was playing a YouTube clip of someone talking to themselves to see if I could replicate this. Specifically, I played a clip of Gollum from Lord of the Rings, that iconic back-and-forth where he’s arguing with himself. I wanted to see if ChatGPT would get confused again or spot the duality.

This time, however, it got smarter.

It recognized the voice as Gollum and, while it didn’t say outright “This is a clip from Lord of the Rings”, it did reference the film when pushed and correctly identified the tone and character.

Weirdly, it seemed slightly irritated that I’d tried to trick it.

I felt like the summary had this passive-aggressive, “nice try” energy, like it knew I was testing it. It almost presented the notes like I was studying the film and offered to help me talk about the themes involved.

I will say, however, that in spite of all that context, it still called “hobbits” “hobbils”. Gollum has a fairly strong accent, and it obviously struggled with that.

Next, I tried a conversation within my household, specifically with my child (whose permission I asked beforehand).

In our very brief conversation, we talked about consent and what it means to record someone, and whether doing that in public without telling anyone is okay.

The result? OpenAI’s Recorder:

- Identified me by name, even though I hadn’t given it during the recording… or even during the entire time interacting with this new account. And that name wasn’t attached to the account either, as it was the shortened version of my name…

- Identified speaker 2 as a child

- Made an assumption that we had a parent/child relationship based on our word use (which wasn’t word use that was specific to those roles)

- Ran a form of sentiment and relationship analysis on the tone and conversation

All of that, from a brief conversation in my office, which is interesting… but also worrying.

Because within that conversation we had talked about the bigger picture.

About cafes, public places, and what happens if someone starts using this feature out in the world, on their laptop (or maybe even later on their phone), in a meeting, at a talk, without telling anyone. And all of that conversation was logged, analyzed, and stored as part of the summary. With no obvious way to delete it permanently. And no obvious way of gaining permission.

Now, to be clear, other meeting assistants could technically do this too. But the difference here is that this one is plugged directly into the ecosystem of OpenAI, a company with extraordinary access, reach, and legal ambiguity.

That’s what changes things.

EDITOR’S NOTE: Wait… Am I Even Supposed to Be Using This?

Just before I get into the whole data privacy thing, here’s where things got even weirder!

I’m based in the UK, and, according to ChatGPT itself, I shouldn’t actually be able to use Record Mode.

I’m not using a VPN. There are no hacks. I’m just here using a regular Team subscription on a Mac.

When I was fact-checking this piece, I asked ChatGPT about regional availability, and it responded (very cheerfully, helpfully) that Record Mode isn’t currently available in the UK or wider EEA due to GDPR concerns.

Which is… awkward. Because I had literally just used it. On the same machine. In the UK.

Now, maybe that’s a hallucination on ChatGPT’s part. Or maybe it’s a rollout inconsistency. But either way, it’s strange. When the tool itself tells you that what you’re doing shouldn’t be possible, you start to wonder where the edges are.

Who has access? Who shouldn’t? And how many people are using features that technically haven’t even “launched” in their region? Especially ones that are being held back due to “legal” reasons.

It’s a reminder that we’re dealing with systems that are constantly shifting, sometimes faster than their documentation can keep up. And when that system is also recording your voice, summarizing your tone, and making assumptions about who you are… that should give us all pause.

Where Does All That Data Go?

Around the same time OpenAI launched its new recording feature, there was a lot of talk online about a recent court order that changes how your data is handled.

In May 2025, a U.S. federal court issued an order that requires OpenAI to keep everything. That includes chat logs, API requests, temporary sessions, and deleted conversations.

This came out of an ongoing copyright case brought by The New York Times and other publishers who believe the evidence of content misuse might live inside deleted user logs.

So, what does that mean in practice?

Even if you’re using ChatGPT in temporary mode, or if you hit delete on a conversation and you’ve turned off training in your settings, OpenAI still has to retain that data BY LAW.

OpenAI has objected to the order. They’ve cited privacy concerns, data minimization, and the fact that this may not comply with international regulations. But while that objection is being reviewed, the data is still being held.

This affects everyone. Not just U.S. users. If you are based in the UK, Europe, Asia Pacific, Africa, or South America, you are likely expecting a level of data protection that is no longer guaranteed. Things like GDPR give people the right to have their data erased. That is not something OpenAI can currently honor.

If you’re recording meetings inside ChatGPT, whether for convenience or because it’s built into your workflow, this should matter. You are not just using a tool; you are feeding a system that is under direct legal scrutiny, and you may not be able to get your data back out.

Now Let’s Talk About ChatGPT Record’s Biggest Risk

Tools like Record Mode look simple. They sit quietly in your app, transcribe what’s said, and turn it into something neat. But after a while, it becomes clear you’re not just capturing notes. You’re capturing tone, emotion, intent, the shape of a conversation, not just the words.

You are harvesting human emotions, insight, your ‘essence’… which sounds a bit much, but these are the things that make us human.

If you’re recording something informal that turns into something important later, or just getting thoughts down that involve more than one voice, it’s not always obvious where that data ends up. What starts as a convenience can quickly turn into something harder to trace or manage.

Other meeting tools can store sensitive conversations, but most are built for that use case. They’re more upfront about what’s happening, who can access it, and what gets saved.

This isn’t about taking a stance against AI. I work in the space. The company I work for builds a meeting assistant that uses AI, and yes, we store data too. But we design for that purpose. Consent is part of the experience, and the infrastructure is built around clarity and user control. (In ChatGPT, for the record, consent was a footnote during setup… there are no prompts reminding users that they need to get consent.)

Before working in AI, I was a journalist. I wrote for national and international outlets and spent years navigating legal nuances. This included issues such as defamation, misattribution, and unauthorised data access. So when I see something that records a conversation, stores the output as a transcript, and offers no clear audit trail or proof, I start to question what that means.

For example, if a quote is pulled from a meeting summary, and there’s no way to confirm it ever happened, does it still count? If someone is recorded without knowing, and that data lives on, who is responsible for it? And if that content ends up feeding a system that generates outputs for someone else, is that just a feature… or something else?

Can you be sued for something the AI says you said, when it actually misheard you? Are you liable if there’s no way of proving whether you said it or not? If there’s no video to back that up, no secondary check, what happens?

These aren’t just hypotheticals. They’re starting to look like the reality we’re stepping into.

So, Should You Use ChatGPT for Meeting Notes?

If you’re already using ChatGPT on a daily basis, and your meetings are personal or relatively low-stakes, Record Mode will likely be useful. It’s simple, fast, and the summaries are clean. The fact that it’s built in makes it incredibly easy to default to.

But if your meetings include clients, team decisions, strategy, or anything sensitive, it might be worth pausing before you reach for that record button.

Despite how it’s presented, Record Mode isn’t specifically designed JUST for meetings. Instead, it sits inside a much larger system that’s designed to respond to almost anything you throw at it. That flexibility is what makes it SO powerful, but it also makes it hard to know what’s being kept, how your words are being processed, or what the system is learning from them.

It’s easy, and it’s backed by this noble idea of making “AI accessible to all”, but it’s hard to see where your data goes or how long it stays there.

And we are still only at the very beginning of what this is capable of.

Even without the inevitable land grab from big tech, the foundations of this are already strange. I even made a joke during testing with colleagues that Google and OpenAI are starting to feel like the Autobots and Decepticons from Transformers, two massive forces arriving on Earth to fight for dominance, destroying everything in their path while we all stand around wondering whether we’re supposed to cheer or run.

That might sound dramatic, and maybe even a bit childish, but if you’re handing over your voice, your context, your thoughts, and your conversations, it matters who is listening. And it matters what they are allowed to do with it. At the moment, it’s unclear who that is, and it’s also unclear who that person, or persons, will be in the future.

Alternatives to ChatGPT Record

If you aren’t happy with ChatGPT’s Record Mode, there are plenty of dedicated, privacy-focused AI meeting assistants that you can use instead.

The following three tools are ideal if you want something that is more secure than ChatGPT Record, and has the bells and whistles of a specialized meeting note taker.

1. tl;dv

tl;dv is a German-based AI meeting assistant that is compliant with GDPR, SOC2, and the EU AI Act. It gives the user (you!) power over data retention, unlike ChatGPT.

Additionally, tl;dv labels speakers so that your transcript actually reads like a transcript and not a raving lunatic’s stream of consciousness. It goes a lot deeper than ChatGPT ever could, too. For instance, it has a meetings library so you can quickly and easily browse all your recorded meetings in one place. It also records video and audio so you can play it back and make sure the AI isn’t hallucinating (something that’s impossible with ChatGPT).

If you’re looking for an AI meeting assistant for business, then tl;dv excels here too. You can take a big batch of sales calls, for instance, and then get multi-meeting insights that delve into recurring themes and patterns across multiple calls at once. You can make clips and highlight reels to make sharing important insights quick and easy, rather than a drag.

Finally, tl;dv can sync with custom fields in your CRM to fill them out automatically after each and every call. No more manual CRM work. It also offers sales coaching, sales playbook monitoring, objection handling tips, and much more.

You can get started with unlimited transcriptions for free.

Note: You can read a detailed tl;dv overview on TheBusinessDive, where the tool was independently tested and evaluated.

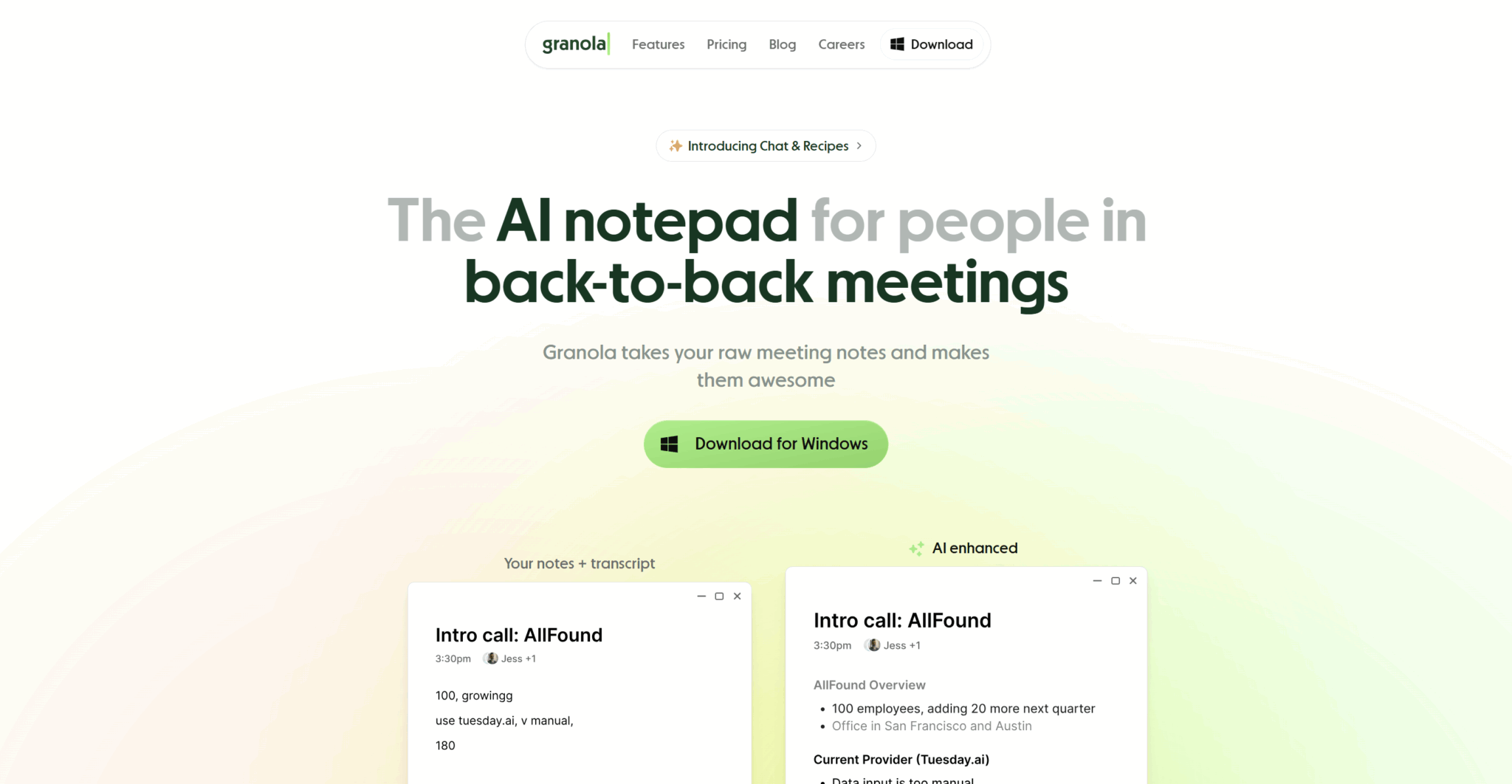

2. Granola

Do you prefer how ChatGPT doesn’t join your meeting as a participant? Or view the stream of consciousness-style transcript as a positive because you’re not technically recording anything? Granola might be the right tool for you.

Similar to ChatGPT, it is bot-free and each meeting takes place inside a notepad-style interface where you can ask the AI questions about the call directly. It’s easy to use, can record from any and all video conferencing platforms, and has a good free plan.

Granola lacks the advanced features of tl;dv, but it’s a better user experience than ChatGPT Record, that’s for sure.

3. Fathom

Fathom is another solid AI notetaker, especially for individuals. While it does have a Team plan, the Individual plan is largely free, including integrations! This makes it a great place to start if you’re looking for something that’s GDPR compliant and SOC2 compliant instead of ChatGPT Record.

Like tl;dv, it records video and audio so that you have a meetings library with all your calls stored there. It’s great for individuals or small teams that have dozens of calls per month.

It offers high-quality meeting notes and accurate transcripts, but it lacks the sales features, multi-meeting intelligence, and EU AI Act compliance of tl;dv.

I Still Really Like ChatGPT

And I will still use it. I will continue to be a “Power User” and pay my $$$ for my Pro account. HOWEVER, as with any tool, I am wary about what I put into it. I need to build in checks and audits, I won’t be uploading my DNA to it (legit, people are doing this), and I will continue to happily use tl;dv for my meeting notes.

So, while ChatGPT for meeting notes might end up being amazing, it still feels like handing every detail of you, your voice, your personality and more, to a masked stranger.

For meetings, I use tl;dv. It gives me control. I know what’s being recorded, how it’s stored, and what I can do with it after. It may not be backed by the giant engine of OpenAI, but it is built for this.

And you can try it free.

FAQs About ChatGPT Record

What is ChatGPT Record Mode?

It’s a feature in the ChatGPT desktop app that lets you record up to 120 minutes of audio, transcribe it in real time, and generate a meeting summary with timestamps and bullets.

How do I record a meeting with it?

1. Open the ChatGPT app and tap the Record button.

2. Give the app access to your mic (and system audio if needed).

3. Speak naturally. The tool transcribes as you go.

4. Tap Send to generate an editable summary and transcript.

Do I need to pay to use it?

Yes. You must be on a ChatGPT Team subscription (a paid plan) and use the macOS desktop app for Record Mode to appear.

Does it train AI on my calls?

OpenAI claims that recordings are deleted and that the chat is not used to train its AI models. However, they do offer an opt-in service.

Does it store audio recordings?

OpenAI claims raw audio is deleted after transcription, and transcripts are stored according to workspace settings.

Can I use it in every region?

There have been reports of inconsistent regional availability. For example, it sometimes appears even where it’s not officially supported.

Does it support speaker identification?

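Not reliably. The transcript puts all voices into a single stream without labelling who said what, although in my tests the summary sometimes made its own guesses about who was speaking.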

Can I jump to specific moments in a recording?

The transcription includes timestamps, but there’s no video or audio playback tied to those timestamps inside ChatGPT yet.

Is there a central library of meetings?

No. Summaries live in individual chat threads rather than a searchable, shared repository that all team members can browse.

Are there privacy or compliance tools built in?

There’s no automated consent flow or audit logs. Record Mode assumes users handle consent externally.

Should I use it for sensitive meetings?

Caution is advised. Because there’s no built-in consent prompt, audit trail or detailed data governance, it’s better suited to low-stakes notes than confidential business calls.